Closed (minded) captions

Today I was tagged into a conversation on Twitter by New York Times best-selling author Morgan Jerkins, who had been watching an episode of The Cleveland Show and happened to have the closed captions on. She wrote:

I was expecting closed captions that were, at least, an attempt at accurately captioning what was said. Perhaps the transcriptionist misheard or misunderstood, but transcribing in good faith.

Instead, there was this:

The caption reads “dam-fa-foo-dun-may-hebeyad-shoot.”

That was followed by:

The caption reads “Naw-a-gah-may-mah-beyad, dayum.”

This raises an important question: what is the function of closed captions? Ostensibly, it’s so the viewers know what was said.

In this case, what was said was:

In IPA (this will be relevant later) that’s:

[dæ̃ fæ fuw dʌ̃ me͡ɪ hɪ beːʲɪʔ ʃːuʔ næ͡w ɑ͡ɪ gɑː me͡ɪk̚ mɑː beːʲɪd̥ deʲɪ̃ʰ]

The transcript should read “damn fat fool done made his bed? Shoot. Now I gotta make my bed. Damn.”

There are a couple of things happening here.

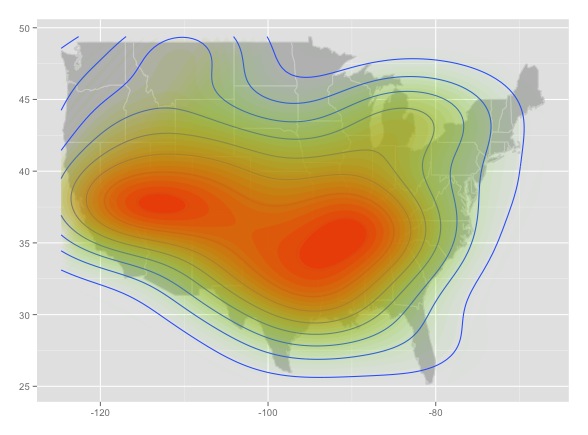

First, the character is a Black character voiced by a White voice actor who evidently did not have the early-life contact with AAE speech communities necessary to speak it natively. He's very good, but he's not perfect, and it's clear that while he's nailed some of the harder parts of some Black accents, he's also missed some important nuance, overgeneralized some parts of the accent, and applied the wrong accent to the wrong place. He's noticed that word-final consonants are often unreleased, realized as glottal stops, or deleted altogether, but he has overgeneralized, leaving out word-final consonants in places where they should appear. In fact, it was his second word, [fæ] instead of [fæʔ], that made me look up who was voicing the character: I've never heard an AAE speaker who would say "fah" for "fat". He noticed that word-final nasals (n, m, ng) are often pronounced as nasalization on the vowel, like in French, and not as a following segment. He also noticed that the vowel in "bed" is often split, so it sounds like the vowels in "play hid". However, the show takes place in Stoolbend, Virginia, and this accent feature is not as common in Virginia AAE. It's common in parts of the Carolinas, and from the Gulf to the Great Lakes, along the Mississippi, but not in most of the mid-Atlantic or Northeast. He also overdoes the consonant deletion: this level of syllable coda deletion is only really plausible in Georgia. He overdoes "gotta," too. That kind of reduction does happen, but not exactly in that context: the word is too slow and too carefully pronounced, so it comes across as caricature.

Caricature brings me to the second point: this is a White actor voicing a Black character on a comedy show, where part of the humor is evidently making fun of how he speaks. It should not be controversial for me to plainly state that it looks a lot like minstrelsy. I'm not entirely clear on how Rallo Tubbs is significantly different from Amos 'n' Andy, or from Thomas D. Rice. Evidently, after the killing of George Floyd, even the voice actor realized it was probably a bad look, and he publicly announced he would no longer voice Black characters. Why George Floyd, but not Mike Brown or Emmett Till, changed his mind remains a mystery. He made it clear he doesn't want to take work from Black voice actors, but I'm not sure the broader context is clear to him, given that statement and, you know, the decade or so of him doing this work.

As I mentioned in my replies on Twitter, it's uncomfortably evocative, to me anyway, of Jim Crow in Dumbo. The crows are clearly a vaudeville/minstrel act, and clearly intended to be speaking AAE ("I-uh be done seen most ev'rything/when I seen an elephant fly!"). They were also voiced by White actors, back in 1941, and the line between imitation as flattery and caricature as mockery is razor-thin there (and they're on the wrong side of that line anyway). We can say that the actors clearly had contact with AAE speakers, and that there's clearly a certain level of respect, but at the end of the day they were taking a job that a Black man simply could not have gotten at that time, to play at the culture, music, and language for laughs. It's no longer the case that a Black voice actor could never get the job (just look at the cast of The Cleveland Show), but there's still a direct line from Al Jolson, through Amos 'n' Andy, through the crows in Dumbo, right up to The Cleveland Show.

Third, and most importantly for this discussion, there are the captions on top of all of that. If you're reading the captions to know what was said, you still don't know what was said! What you get is that the character said something unintelligible. The way I look at it, there are two plausible possibilities, neither of which is good. First, the transcriptionist couldn't make sense of the utterance and did the best they could, assuming it was some kind of gibberish. Or Jive (note to self: write post about Airplane!). Second, the transcriptionist thought it was more important to show that the character wasn't speaking "right" than to actually, you know, transcribe what was said. That would explain why "done" was written as "dun". They're pronounced the same, but any time a writer chooses to write something like "eye dun tole yew" instead of "I done told you", they're not telling us much about how a character sounds; they're telling us a great deal about how we're supposed to perceive that character. Is it really possible that a transcriptionist who had flawlessly transcribed up to that point could no longer tell from context that the character was talking about making his bed? That he said "now", a recognizable word of English, and not "naw"?

This is a really interesting case to me, because it is in some ways very subtle. What has to be behind this choice, any way you slice it, is a certain, often unstated, linguistic ideology. Most of us were taught explicitly in school that writing takes precedence over speech, and that for both writing and speech there is one correct way to do things, which coincidentally overlaps with how well-educated, wealthy White people (but not White Ethnics!) speak. This manifests itself in all aspects of our society, from arguments about pronunciation to arguments about whether something "is (really) a word." Built into that ideology is the assumption that there is some reason one way of doing things is better (clarity, logic, authority), and the reason given is never the truth: that the prestige variety's status rests on social norms, not linguistic facts. Lastly, this ideology positions ways of speaking that are not "classroom" English as inferior (and lacking in clarity, logic, and authority). This captioning only makes sense if we recognize that the transcriptionist, the service running these captions unquestioningly (in this case, Hulu), and likely most of the people involved in the show's production either view AAE as unintelligible, as something that can function as the butt of a joke, or both. It's a subtle form of anti-blackness that's not necessarily predicated on overt or deep hostility. It's casual.

That's not to say that all nonstandard spellings are inherently racist, or offensive, or what have you. I've even written chapters on how people intentionally represent how they speak with novel spellings (as in "dis tew much"). But in this particular case, there's no valid reason I can think of why turning on the captions on Hulu should result in "dam-fa-foo-dun". And this is, weirdly, something you only really see with AAE and with some socially stigmatized varieties of English spoken by (generally poor) White people, like Appalachian English.

As a thought experiment, can you imagine what would happen if Downton Abbey were captioned this way?

“noaw mayde? noaw nah-nee? noaw valette eevun?”

“ihts nayntiyn twuntee sevun, wi-uh mahdun foake”

(If that wasn't transparent to you, those were the first lines of the Downton Abbey movie trailer.)

This was a very interesting counterpoint for me this week, as I've been reviewing transcripts of a deposition and have been blown away by the accuracy and professionalism of the court reporter. While mistranscription of AAE (and mock AAE!) is a systemic problem, it's not a universal one.

I don’t know where people stand on this issue, but I know where I do. While I see mistranscriptions of AAE everywhere, from Netflix to Turner Classic Movies, this is different, in that it’s apparently intentional. We can do better than this.

-----

©Taylor Jones 2020
